Key Takeaways

- Research shows how AI could deepen the digital divide.

- Some AI algorithms have bias baked in, from facial recognition that may not recognize Black students to detection tools that falsely flag essays written by non-native English speakers as AI-generated.

- Join us and our partner organization, the International Society for Technology in Education (ISTE), for a webinar series on AI in education.

From AI robots serving as tutors to spell check correcting written assignments, AI has found its way into classrooms across the country, including Melissa Gordon’s. The high school business teacher in Ann Arbor, Mich., started using AI in her classes over a year ago.

“I saw the amazing things other teachers were doing with it in their classrooms. So, I started typing things into [ChatGPT] and came up with different ways to use it in class,” Gordon says.

In her business principles and management class, for example, Gordon had her students use ChatGPT to help with resume writing. “We dove into the ethics of ChatGPT and the appropriate ways to use this technology,” she says.

She saw this as an opportunity to teach students to use AI as a tool, not as a substitute for learning. “Students are being exposed to it either way. If we teach them how to use it for good, then I think they will see it as a tool,” Gordon says.

As schools across the country begin to implement AI technology in classrooms, concerns are also growing that it could widen the digital divide.

AI May Deepen the Digital Divide

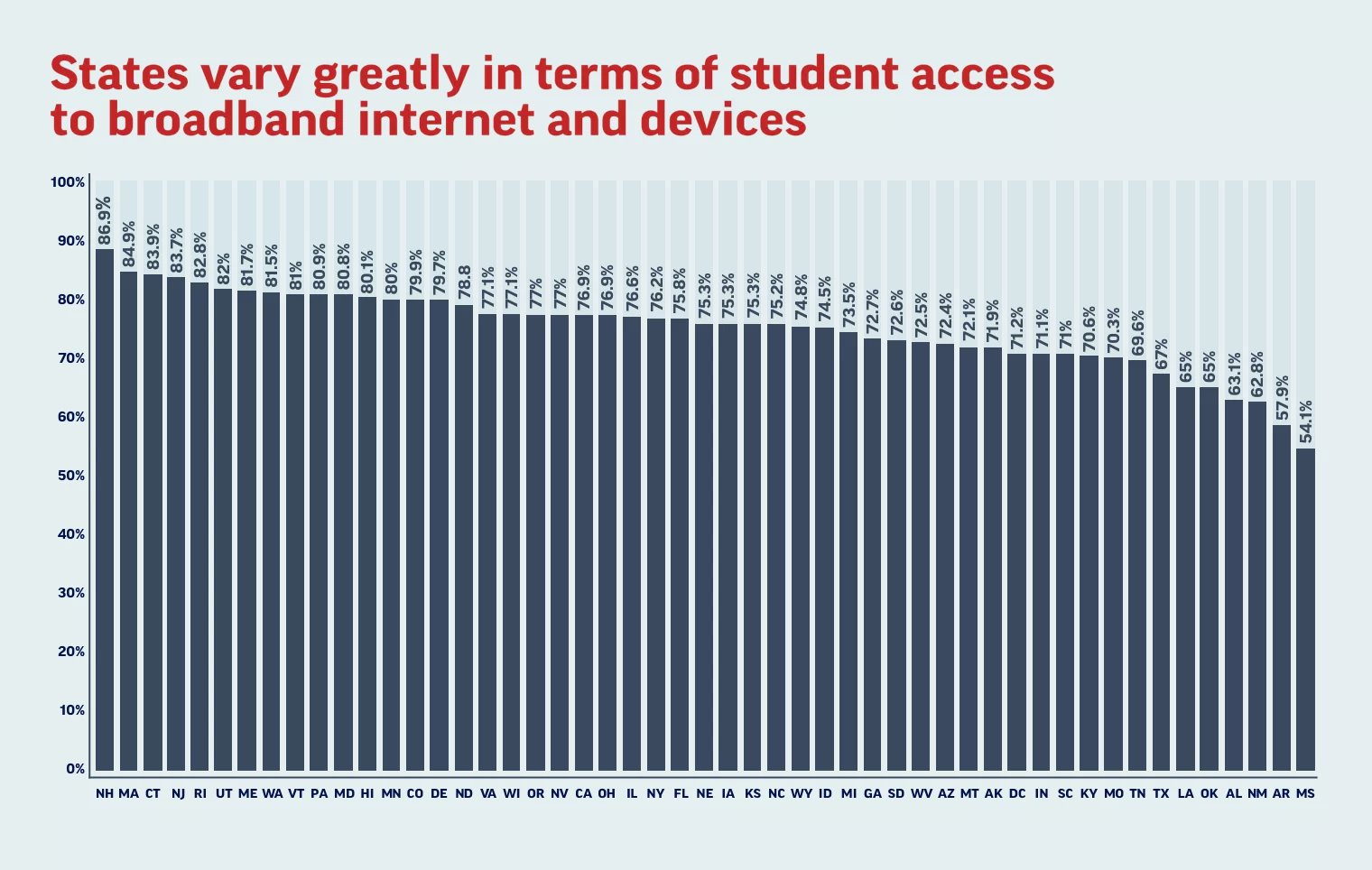

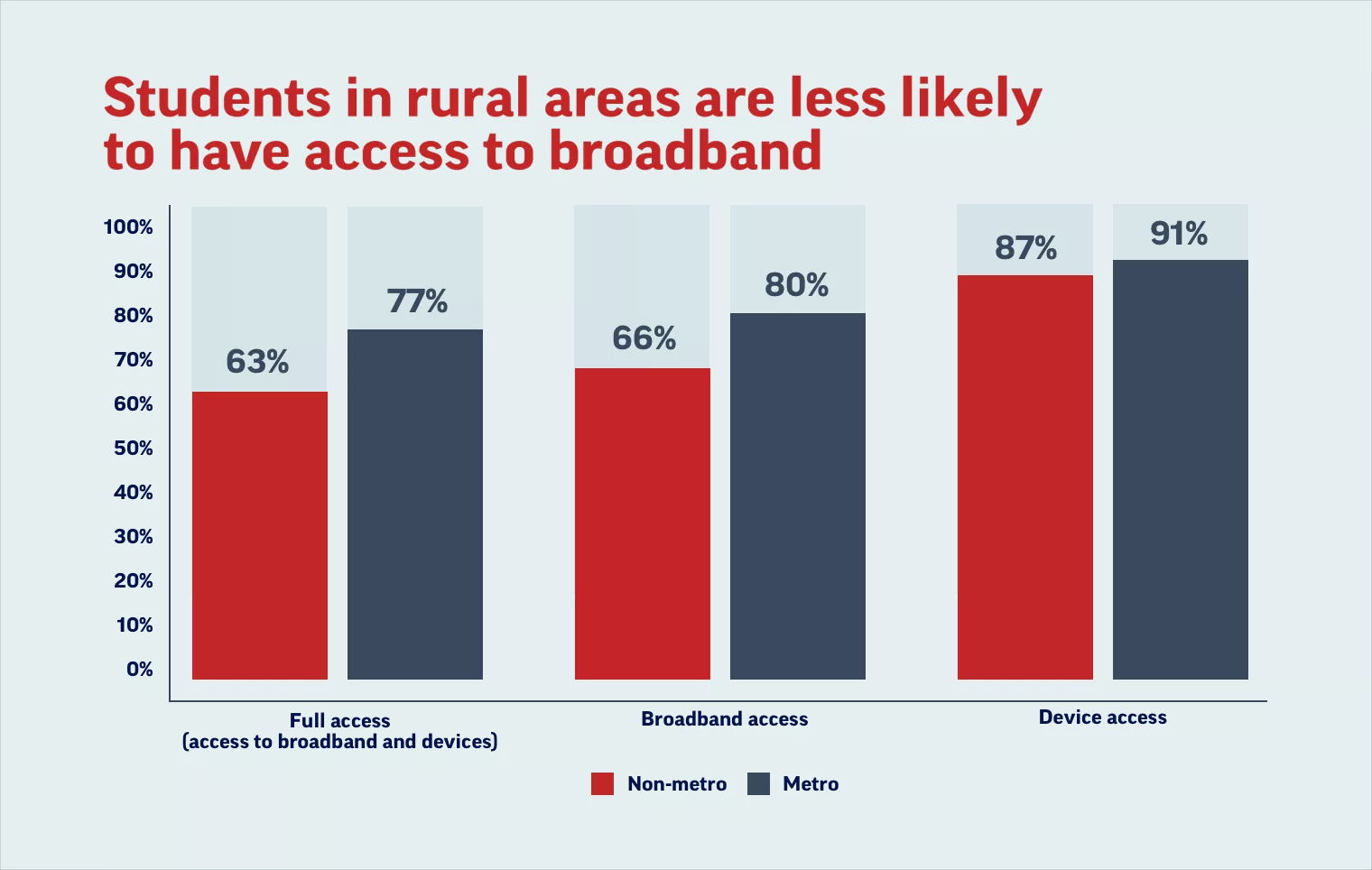

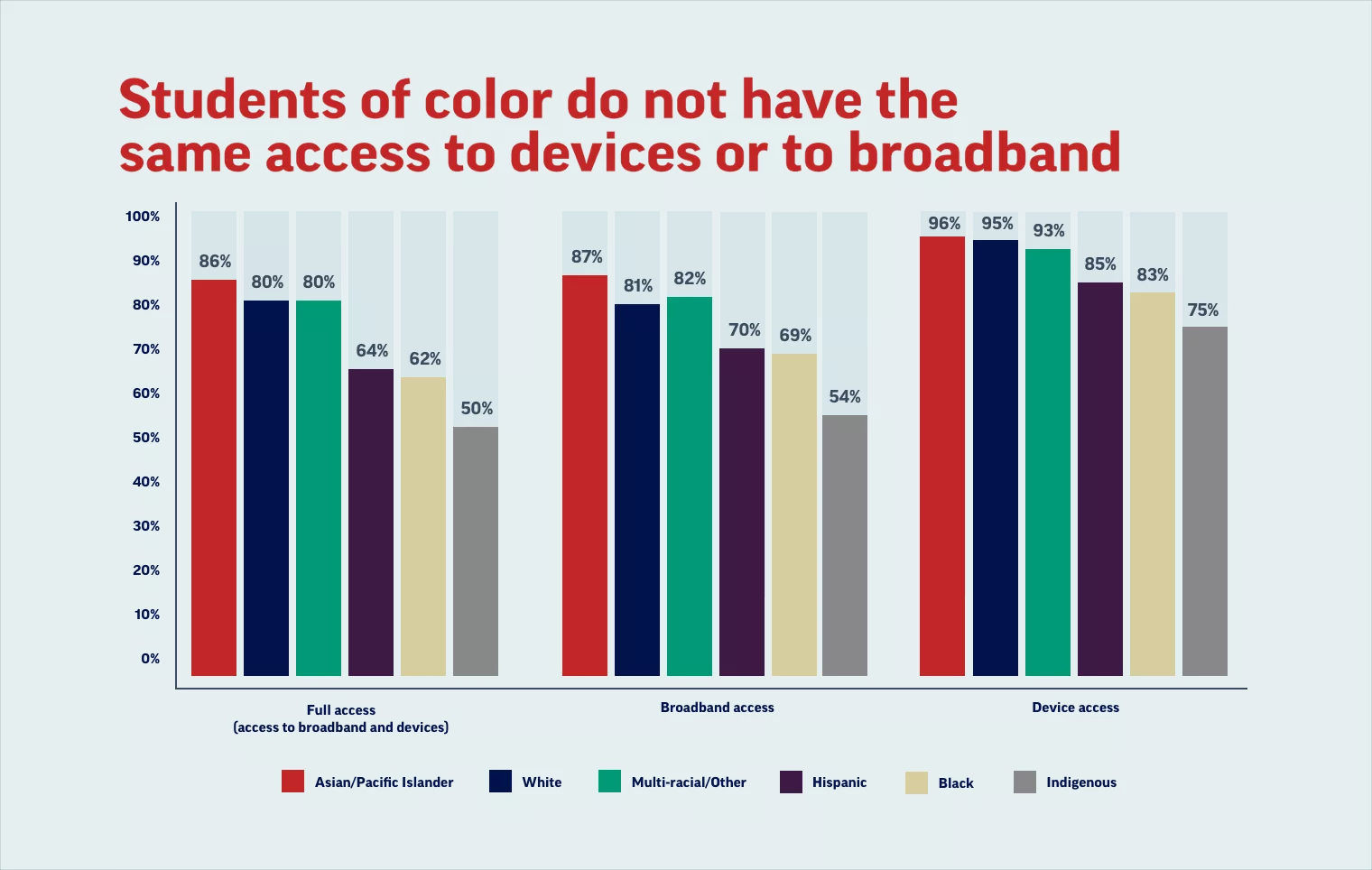

NEA research estimates that one quarter of all school-aged children live in households without broadband access or a web-enabled device such as a computer or tablet. This inequality falls systematically along the historical lines of race, socioeconomic status, and geography.

Misty Freeman, an educator and unconscious bias coach, has researched the intersections of bias, technology, and neuroscience and their impact on Black women and girls. Freeman says she has seen how technology has affected Black students.

One of those ways is access. In the United States, the digital divide limits internet access and technology in rural areas, regardless of race. When race and ethnicity are factored in, the divide widens further, particularly in southern areas with larger Black populations.

“There’s not enough awareness and access to AI in the south because of the digital divide,” Freeman says. According to a report by the Joint Center for Political and Economic Studies, “Thirty-eight percent of African Americans in the Black rural south do not have access to home internet.”

This disparity, in turn, leaves southern students and teachers with limited access to information and new technological advancements.

Biases Baked into Algorithms

Research has also shown that as these tools grow in scope and in their ability to mimic characteristics of human intelligence, their biases are growing as well.

According to IBM, AI bias refers to algorithms that “produce biased results that reflect and perpetuate human biases within a society, including historical and current social inequality.”

According to a Stanford University study, for example, AI bias can harm non-native English speakers: detection tools may falsely flag their written work as AI-generated, which could lead to accusations of cheating.

For young Black girls in particular, “Facial recognition and technology may not resemble them. Or there could be biased language models that can perpetuate harmful stereotypes,” says Freeman.

Scientists at MIT found that a language model treats “flight attendant,” “secretary,” and “physician’s assistant” as feminine jobs, while it treats “fisherman,” “lawyer,” and “judge” as masculine.

Meanwhile, researchers at Dartmouth found that language models have biases, such as stereotypes, baked into them. Their findings suggested, for example, that the models may assume a particular group of people is either good or bad at certain skills, or that someone holds a certain occupation based on their gender.

Diversifying AI Creators

But bias inside the classroom is not new for Black students and other students of color, making it even more critical for educators and developers to understand the way AI can affect students of marginalized groups.

“Black students face unconscious bias without technology,” Freeman says. “So having developers of AI that do not further perpetuate that bias is important.”

Diversifying the creators of AI has a direct impact on what the algorithms produce. Because AI systems are built on past data that mirror stereotypes and biases already present in society, they can unintentionally amplify those stereotypes in classrooms.

“AI should only supplement what is being done in the classroom in order to level the playing field for students of color,” Freeman says.

The introduction of AI in classrooms offers many opportunities for growth. However, its effects on students of color require a strategic and collaborative approach, Freeman says.

“Equity goes beyond our classrooms and our region. So, policymakers need to get information from the majority of educators to figure out what is needed, especially for schools in rural areas,” she says.

AI Guidance for Educators

There is currently no federal policy on AI in education, although the U.S. Department of Education (ED) has released guidance on the topic.

Additionally, the Biden administration released an extensive executive order on AI that calls on ED to, among other things, develop an “AI toolkit” for education leaders implementing recommendations from the department’s AI and the Future of Teaching and Learning report. The recommendations include ensuring appropriate human review of AI decisions, designing AI systems to enhance trust and safety, and developing education-specific guardrails.

Some states have provided official guidance on the best ways to integrate AI in the classroom. But for the most part, teachers have been left to their own devices to decide whether to use it in their instructional activities.

Organizations such as the International Society for Technology in Education (ISTE) have partnered with educators and students to give them the tools to use AI-driven technologies more efficiently and confidently. They are focused on giving everyone, especially people from marginalized groups, the access they need to become empowered users of this new technology.

Because AI is not going away anytime soon, it is increasingly important for teachers and students to feel confident using it. As AI technologies continue to advance, Michigan’s Melissa Gordon hopes “students will have a safe space to experience AI and figure out the best ways it can be used.”